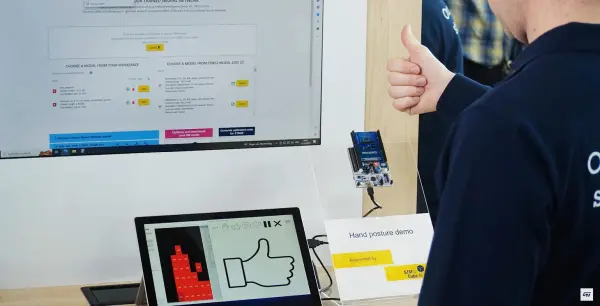

Have you ever imagined controlling a device with nothing but hand motions? Picture your phone sending your friends emojis that match your hand gestures in real time.

This vision is now a reality! ST’s innovative multi-zone Time-of-Flight (ToF) sensors make camera-free gesture recognition possible, and AI algorithms running on low-power STM32 microcontrollers identify hand poses with minimal processing requirements.

Users can define custom gesture vocabularies, collect training data, build machine learning models, and develop fully customized applications! Without the need for cameras, ST sensors unlock entirely new interactions between people and technology through simple hand movements.

Approach

This hand gesture recognition solution identifies a customizable set of hand poses using an ST multi-zone Time-of-Flight sensor.
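Because the set of hand poses is customizable, an application ultimately has to map the model's per-class scores back to your own gesture labels. The following is a minimal Python sketch of that final step, assuming the model outputs one score per gesture in the same order as the training labels. The label names, the `decode_posture` function and the 0.6 confidence threshold are hypothetical and are not part of ST's tools.

```python
from typing import Optional, Sequence

# Hypothetical user-defined gesture vocabulary; the order must match the
# label order used when the model was trained.
GESTURES = ["flat_hand", "like", "dislike", "fist", "love", "none"]


def decode_posture(scores: Sequence[float],
                   labels: Sequence[str] = GESTURES,
                   min_confidence: float = 0.6) -> Optional[str]:
    """Return the highest-scoring label, or None if no score reaches the threshold."""
    if len(scores) != len(labels):
        raise ValueError("expected exactly one score per label")
    # Index of the largest score (the first one wins on ties).
    best = max(range(len(scores)), key=lambda i: scores[i])
    if scores[best] < min_confidence:
        return None  # too ambiguous: let the application ignore this frame
    return labels[best]
```

For example, `decode_posture([0.05, 0.8, 0.05, 0.05, 0.03, 0.02])` returns `"like"`, while a flat score vector such as `[0.3, 0.3, 0.1, 0.1, 0.1, 0.1]` returns `None`, so ambiguous frames can be skipped instead of triggering the wrong action.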

The development process involves:

Defining your own collection of hand gestures

Collecting training data from multiple users with the ToF sensor’s 8 × 8 zones of distance and signal readings

Employing the training script from ST’s MCU Model Zoo to build an AI model

Deploying the trained model on your chosen STM32 MCU using either STM32Cube.AI Dev Cloud or the “Hand Posture Getting Started” application included in the Model Zoo
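Each sample collected in the data-collection step is a single sensor frame: 64 distance values plus 64 signal values. The minimal Python sketch below turns such a frame into an 8 x 8 x 2 model input. It assumes the zones are stored row by row and treats any zone outside a hypothetical 100 to 400 mm range as background. The thresholds, the background value and the `preprocess_frame` name are illustrative assumptions, not the Model Zoo's actual preprocessing.

```python
from typing import List, Sequence

ROWS = COLS = 8          # the multi-zone ToF sensor reports an 8 x 8 grid
ZONES = ROWS * COLS

# Hypothetical preprocessing parameters in millimetres; tune for your setup.
MIN_DISTANCE_MM = 100.0
MAX_DISTANCE_MM = 400.0
BACKGROUND_MM = 500.0


def preprocess_frame(distance_mm: Sequence[float],
                     signal: Sequence[float]) -> List[List[List[float]]]:
    """Convert one 64-zone frame into an 8 x 8 x 2 nested list [distance, signal].

    Zones outside [MIN_DISTANCE_MM, MAX_DISTANCE_MM] are treated as background:
    their distance becomes BACKGROUND_MM and their signal becomes 0.
    """
    if len(distance_mm) != ZONES or len(signal) != ZONES:
        raise ValueError(f"expected {ZONES} values per channel")
    frame = []
    for row in range(ROWS):
        cells = []
        for col in range(COLS):
            i = row * COLS + col  # assumption: zones are stored row by row
            d, s = float(distance_mm[i]), float(signal[i])
            if not (MIN_DISTANCE_MM <= d <= MAX_DISTANCE_MM):
                d, s = BACKGROUND_MM, 0.0
            # Scale distance by the background value so it never exceeds 1.0;
            # the signal channel is kept raw in this sketch.
            cells.append([d / BACKGROUND_MM, s])
        frame.append(cells)
    return frame
```

Running every frame through the same function both before training and on the target at inference time keeps the model's inputs consistent between the two stages.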

This approach enables rapid development with configurable gestures, and the resulting models have a small memory footprint and low processing requirements.
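Part of the small footprint comes from how little data each frame carries. The short Python calculation below counts the bytes in one 8 x 8 x 2 input. The int8 figure assumes a quantized model, and the raw 640 x 480 grayscale camera frame is only an illustrative reference point, not a claim about any particular camera-based system.

```python
def input_bytes(height: int, width: int, channels: int, bytes_per_value: int) -> int:
    """Size in bytes of one model input frame."""
    return height * width * channels * bytes_per_value


# 8 x 8 zones with two channels (distance and signal).
tof_float32 = input_bytes(8, 8, 2, 4)   # float32 input
tof_int8 = input_bytes(8, 8, 2, 1)      # assumption: int8-quantized input
# Reference point (assumption): a raw 640 x 480 grayscale frame, 8 bits per pixel.
vga_gray = input_bytes(480, 640, 1, 1)

print(tof_float32, tof_int8, vga_gray, vga_gray // tof_int8)  # 512 128 307200 2400
```

Even before any model runs, a ToF frame is three orders of magnitude smaller than a raw VGA frame, which is one reason the inference fits comfortably on a low-power STM32.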

Depending on the application, the ToF sensor can face the user or the ceiling, or be mounted on a moving object. This enables use in products such as personal computers, interactive displays, smart appliances, and augmented reality devices.